4 Lessons Learned from Building AI Products

Fundamentally, AI and traditional product roles leverage the same core product management skills. But what are the nuances?

As AI products continue to surge in popularity thanks to endless VC investment, internet hype, a steady stream of use cases, and a seemingly boundless set of possibilities, the practice of product management has experienced subtle changes. While the fundamental skills a product manager (PM) needs to drive a product to success haven’t changed, there is a clear difference in how AI product development should be approached.

1. Best Practices for Product Development Are Still Being Defined & Refined

Traditionally, technologies such as B2B SaaS, mobile apps, and e-commerce have better-established best practices and guidelines when it comes to both product development and product iteration. Here are a few examples:

In enterprise B2B SaaS, depending on the use case, customers (such as clients and partners) often have an idea of the value and the problem they’re trying to solve. Engineering teams can work with customer-facing teams to ensure those requirements are translated into a product via modern or traditional development cycles.

In many other traditional industries, the shape or form of a product can vary, but teams tend to follow the same underlying set of methods: some form of scrum or kanban, a backend engineering team, a frontend engineering team, a designer, and a product manager. From there, they follow an iterative process of ideation and requirement gathering, backlog triaging, running sprints, and tracking dependencies. This method allows use case discovery to be scrappy, iterative, and agile.

As you’ll soon see in lesson #2, the challenge of discovering “killer use cases” becomes apparent in the AI space. Many development teams follow the same model: leveraging some sort of large language model (LLM) in their product, with an underlying “platform” either developed in-house or borrowed from an AI platform such as Azure AI or Amazon Bedrock. Some products even use multiple LLMs, each focused on a specific use case, and choose between them based on what the user asks (the prompt). These can be developed in-house or found through enterprise solutions in the form of “AI agents” (such as the Generative AI Agents by Oracle).
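To make the "multiple LLMs per use case" idea concrete, here is a minimal sketch of a prompt router. Everything in it is an assumption for illustration: the handler names, the keyword table, and the naive substring matching are hypothetical, and a real product would more likely call separate models or agents and use a classifier instead of keywords.

```python
from typing import Callable, Dict

# Hypothetical specialist handlers. In a real product, each one would
# call a different model or agent instead of returning a tagged string.
def summarize_agent(prompt: str) -> str:
    return f"[summarizer] {prompt}"

def planning_agent(prompt: str) -> str:
    return f"[planner] {prompt}"

def general_agent(prompt: str) -> str:
    return f"[general] {prompt}"

# Illustrative keyword -> handler table (assumed, not from any real product).
ROUTES: Dict[str, Callable[[str], str]] = {
    "summarize": summarize_agent,
    "summary": summarize_agent,
    "break down": planning_agent,
    "plan": planning_agent,
}

def route(prompt: str) -> str:
    """Send the prompt to the first specialist whose keyword appears in it."""
    lowered = prompt.lower()
    for keyword, handler in ROUTES.items():
        if keyword in lowered:
            return handler(prompt)
    # No specialist matched, so fall back to a general-purpose model.
    return general_agent(prompt)

print(route("Summarize this ticket for me"))
print(route("What is the weather like?"))
```

Even in this toy form, the sketch shows why ownership questions come up quickly: someone has to maintain the routing table, each handler, and the fallback.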

Because of this nuance, product development practices for AI-powered products remain a blur. Questions about engineering operations and the product life cycle remain unanswered. For example:

Should we have a dedicated backend team focused only on building these “AI agents”?

How do we operationalize these AI agents and monitor them?

Who monitors and foots the bill for the LLMs being used, and how do we divide the costs reasonably?

Because of the lack of clarity on “killer use cases,” do we focus more time than normal on product discovery instead of delivery?
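One possible answer to the cost-splitting question is to divide the shared LLM bill in proportion to each team's token usage. The sketch below assumes you can already measure tokens per team. The function name, team names, and figures are all hypothetical.

```python
from typing import Dict

def split_llm_bill(total_cost: float, tokens_by_team: Dict[str, int]) -> Dict[str, float]:
    """Split a shared LLM bill across teams in proportion to tokens used."""
    if not tokens_by_team:
        return {}
    total_tokens = sum(tokens_by_team.values())
    if total_tokens == 0:
        # Nobody used the model this period, so split the fixed cost evenly.
        even_share = total_cost / len(tokens_by_team)
        return {team: even_share for team in tokens_by_team}
    return {
        team: total_cost * tokens / total_tokens
        for team, tokens in tokens_by_team.items()
    }

# Made-up usage figures for three hypothetical teams.
usage = {"search": 600_000, "support": 300_000, "internal": 100_000}
for team, share in split_llm_bill(1000.0, usage).items():
    print(f"{team}: ${share:.2f}")
```

Proportional splitting is only one choice. Teams with spiky usage or reserved capacity may need a different model.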

Best practices for product development remain a gray area, but as more organizations adopt AI-powered solutions, we’ll collectively approach the light at the end of the tunnel.

2. Depth of Use Cases > Breadth of Use Cases

This may sound like common sense in the world of product, but there’s a certain “AI flavor” that differentiates how PMs would approach product development.

With LLMs now generally available across numerous platforms and consumable through public APIs, thousands of tech startups have ventured into the AI world in hopes of solving a key set of problems. The nuance begins at the very start of problem discovery: PMs soon find themselves in the deep end, discovering that LLMs don’t solve their initial “brilliant” idea as well as they thought, and thus begins the “iterative testing cycle” with AI.

Many teams attempt to solve one idea at a time with AI, failing fast and receiving feedback from customers as they go. In general, many use cases — especially those in the software productivity space — tend to fall into the following categories:

“Needle in the haystack” discovery. For example, “What work item should I focus on today?”

Breaking work down into smaller components.

Summarizing all content into a simplified message.

General help guidance based on provided documentation. This one is interesting, as it usually relies on an AI technique called retrieval-augmented generation (RAG). Content stored in a repository of some sort is “retrieved” by the AI bot so that it can be used as a reference in its answers. An example would be, “What are some best practices to do XYZ?”

Some sort of niche “action” that the AI product can help do for you as part of a larger overall user flow or process. Imagine a developer trying to create a new repository structure for a newly defined web project — and then some form of AI creating all those code files for them to replace the otherwise manual effort needed.
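To illustrate the RAG category above, here is a minimal sketch of the "retrieve, then ask" flow. It is deliberately simplified: the documents are made up, and retrieval is a plain word-overlap ranking, whereas production systems typically use embedding similarity over a vector store. The model call itself is left out.

```python
import re
from typing import List, Set

# Made-up documentation snippets standing in for a real content repository.
DOCS = [
    "To reset your password, open Settings and choose Security.",
    "Sprints should be planned with the whole team every two weeks.",
    "Export reports as CSV from the Reports page.",
]

def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, docs: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        docs,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question: str, docs: List[str]) -> str:
    """Put the retrieved text in front of the question as reference context."""
    context = "\n".join(retrieve(question, docs))
    return f"Answer using only this documentation:\n{context}\n\nQuestion: {question}"

print(build_prompt("How do I reset my password?", DOCS))
```

The resulting prompt is what would be sent to the LLM, so the quality of the retrieval step directly bounds the quality of the answer.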

But developing products with generative AI (GenAI) inherently introduces a dependency on a clear “AI-enabled” use case. The problem lies with GenAI itself: with such a powerful technology and the amount of data used to train these LLMs, it becomes unclear where the true value and set of use cases lie.

Just look at Atlassian’s newly announced Rovo or Microsoft 365 Copilot. Each AI-enabled assistant aims to solve a certain set of use cases, but it’s clear that some use cases are better performed by AI than others. Time will tell as more players in the industry continue to discover new abilities and niches that AI can conquer.

3. Work Diligently with Larger Context Windows

Before I dive in, let’s cover what context windows are in relation to LLMs. When a user types a prompt and submits it to an LLM (such as through the textbox in ChatGPT), the context window is the maximum amount of text, measured in tokens, that the model can take into account at once, including the prompt and the response it generates. Whatever you place in it is the context, or information, that the model can refer to when it generates its response. A simplified example would be:

Today’s temperature is 30 degrees Celsius. Tomorrow, it’s 80 degrees Fahrenheit. Now tell me, what’s the difference in temperature between today and tomorrow?

That first line — the one depicting information about today’s and tomorrow’s temperature — sets the context for the main question you’re asking.
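As a small illustration, the sketch below splits the example into its context and question parts and works out the answer a correct model should give. The variable and function names are mine. Only the temperatures come from the example.

```python
# The context sets up the facts. The question asks about them.
context = "Today's temperature is 30 degrees Celsius. Tomorrow, it's 80 degrees Fahrenheit."
question = "What's the difference in temperature between today and tomorrow?"

# Both parts travel together in the prompt and share the same context window.
prompt = f"{context}\n{question}"

def fahrenheit_to_celsius(degrees_f: float) -> float:
    """Standard conversion: C = (F - 32) * 5 / 9."""
    return (degrees_f - 32) * 5 / 9

today_c = 30.0
tomorrow_c = fahrenheit_to_celsius(80.0)   # about 26.7 degrees Celsius
difference_c = today_c - tomorrow_c        # about 3.3 degrees Celsius

print(prompt)
print(f"Expected answer: tomorrow is about {difference_c:.1f} degrees Celsius cooler.")
```

Note that the model has to notice the unit mismatch on its own, which is exactly the kind of detail that gets harder to catch as the context grows.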

Even with Google Gemini offering a 1 million-token context window as of May 2024, it’s important for PMs and AI developers to understand the retrieval limitations AI still faces, especially in products that absolutely must not make mistakes.

The more content that is thrown into an LLM, whether within the context window, through RAG, or both, the more data the model has to sift through and filter. This lessens the chance of the model finding the pieces of information most relevant to the user’s question. What many developers will find is that while AI exhibits immense creativity with the data it’s fed, it can often produce inconsistent answers if the pool of information becomes too vast. Another common side effect of large datasets (and thus larger context windows) is the sheer amount of time the AI needs to process everything and generate a response.
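One common way to keep the pool of information from growing too vast is to cap how much retrieved content goes into the prompt. The sketch below assumes the chunks are already sorted from most to least relevant, and it approximates tokens by counting words. Real tokenizers count differently, so treat the numbers as rough. The function names are hypothetical.

```python
from typing import List

def estimate_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def fit_to_budget(chunks: List[str], max_tokens: int) -> List[str]:
    """Keep the most relevant chunks that fit within the token budget.

    Assumes `chunks` is sorted from most to least relevant.
    """
    selected: List[str] = []
    used = 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > max_tokens:
            break  # Stop at the first chunk that would exceed the budget.
        selected.append(chunk)
        used += cost
    return selected

# Made-up chunks, ordered by assumed relevance.
ranked_chunks = [
    "Reset passwords from the Security page.",
    "Admins can force a reset for any user.",
    "The Security page also lists active sessions and devices.",
]
print(fit_to_budget(ranked_chunks, max_tokens=15))
```

A tighter budget trades recall for speed and consistency, which is a product decision as much as an engineering one.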

Working with a product team developing an AI-based Copilot myself, I’ve witnessed this first-hand. Response time depends on both the available computing resources and the speed at which the model can process a large amount of data, and answers can often take well over a minute to generate, depending on the input size. A 1 million-token context window gives the model numerous opportunities to discover patterns or key points (thus the “needle in the haystack” use case), but it comes at the cost of both response time and the consistency of answer quality.

4. We’re All Dependent on Compute & Infrastructure

There are so many dependencies to track when building AI products that more are being discovered every day. Beyond the obvious ones (tokens, GPUs, and computing power), let’s look at some that founders and PMs will have to watch out for:

Fighting for PTUs (provisioned throughput units) when using third-party LLM infrastructure. Provisioned throughput reserves a set amount of model processing capacity for your deployment, which determines how much processing power you get (and, in effect, the hardware allocated to you) when calling the model. For example, if you’re borrowing Azure AI’s infrastructure and using the GPT-4 API, you may have to pay for additional PTUs to get faster processing and, thus, faster answer generation.

Token processing costs.

API availability/uptime.

Costs: both LLM and infrastructure costs.

I’ve really only scratched the surface; there is a plethora of dependencies to think about when leaning on third-party LLM APIs, computing power, and infrastructure. Almost all startups and side projects rely on these (now widely available) services, so it’s important to calculate the risks of cost, API uptime, and, of course, processing power.
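A back-of-the-envelope calculation helps when weighing the cost risk. The sketch below assumes per-token pricing with separate input and output rates, which is how many LLM APIs bill. The prices and traffic figures are placeholders, not real rates, so substitute your provider's current pricing.

```python
def estimate_monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Estimate monthly LLM spend from average per-request token counts."""
    cost_per_request = (
        input_tokens / 1000 * price_in_per_1k
        + output_tokens / 1000 * price_out_per_1k
    )
    return cost_per_request * requests_per_day * days

# Placeholder numbers: 1,000 requests a day, 2,000 input and 500 output
# tokens per request, at hypothetical rates of $0.01 and $0.03 per 1K tokens.
monthly = estimate_monthly_cost(1000, 2000, 500, 0.01, 0.03)
print(f"Estimated monthly cost: ${monthly:,.2f}")
```

Because input tokens usually dominate in RAG-style products, trimming context is often the quickest lever on this number.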

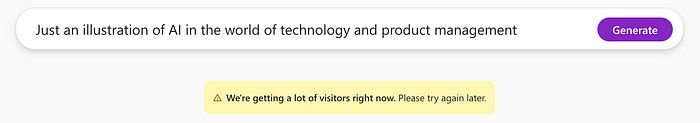

Sometimes, it could result in the AI service being overloaded with users trying to process the models simultaneously, resulting in the service being unable to generate a response at times. Just look at Microsoft Designer below at 11 PM PT on a Wednesday evening:

You’re fighting for your spot in line!
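On the client side, one common way to cope with an overloaded service is to retry with exponential backoff. The sketch below is generic: `ServiceOverloaded` and the `call` argument are hypothetical stand-ins for whatever error and request function your provider's SDK exposes.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class ServiceOverloaded(Exception):
    """Hypothetical error raised when the AI service is too busy to respond."""

def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call`, doubling the wait after each overloaded response."""
    for attempt in range(max_attempts):
        try:
            return call()
        except ServiceOverloaded:
            if attempt == max_attempts - 1:
                raise  # Out of attempts, so surface the error to the caller.
            sleep(base_delay * 2 ** attempt)
    raise ValueError("max_attempts must be at least 1")
```

With the defaults, the waits are 1, 2, and 4 seconds before the final attempt. Adding random jitter to each delay is a common refinement so that many clients don't retry at the same moment.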

About Me

My name is Kasey Fu. I’m passionate about writing, technology, AI, gaming, and storytelling 😁.
